One of the most confounding concepts to emerge from the cauldron of early 20th-century physics was the idea that quantum objects can exist in multiple states simultaneously. A particle could be in many places at once, for example. The math and experimental results were unequivocal about it. And it seemed that the only way for a particle to go from such a “superposition” of states to a single state was for someone or something to observe it, causing the superposition to “collapse.” This bizarre situation raised profound questions about what constitutes an observation or even an observer. Does an observer merely discover the outcome of a collapse or cause it? Is there even an actual collapse? Can an observer be a single photon, or does it have to be a conscious human being?

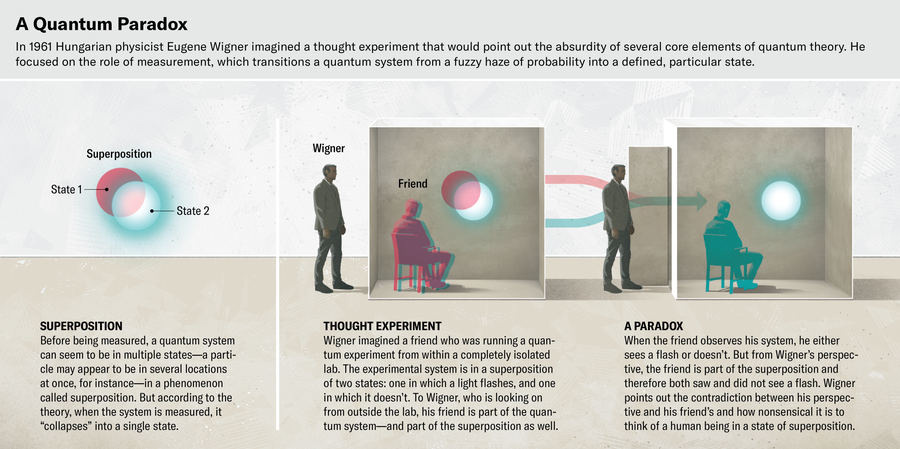

This last question was highlighted in 1961 by Hungarian physicist Eugene Wigner, who came up with a thought experiment involving himself and an imaginary friend. The friend is inside a fully isolated laboratory, making observations of a quantum system that’s in a superposition of two states: say, one that causes a flash of light and one that doesn’t. Wigner is outside, observing the entire lab. If there’s no interaction between the lab and the external world, the whole lab evolves according to the rules of quantum physics, and the experiment presents a contradiction between Wigner’s observations and those of his friend. The friend presumably perceives an actual result (flash or no flash), but Wigner must regard the friend and the lab as being in a superposition of states: one where a flash is produced and the friend sees it, and one where there is no flash and the friend does not see anything. (The friend’s state is not unlike that of Schrödinger’s cat while it is dead and alive at the same time.)

Eventually Wigner asks the friend what he saw, and the entire system supposedly collapses into one or the other state. Until then, the “friend was in a state of suspended animation before he answered,” Wigner wrote, pointing out the absurdity. A paradox was born.

Over the past decade physicists have proposed and performed limited versions of the experiment. Of course, they can’t carry it out as Wigner envisioned, because human beings can’t be put in superposition. But scientists have tested the idea by using photons—particles of light—in place of Wigner’s friend. In a basic sense, an “observation” is the interaction of the environment, or some outside system, with the system being observed. One simple observation is for a single photon to interact with the system. This interaction then puts the photon into a superposition of states, so that it carries information about the system it observed. These experiments proved that Wigner’s paradox is real, and to resolve it, physicists may have to give up some of their dearly held beliefs about objective reality. But single photons obviously fall short of human observers.

To understand the full implications of Wigner’s idea, scientists have dreamed up an observer that comes much closer to the original friend, albeit one that borders on science fiction. Howard M. Wiseman, director of the Center for Quantum Dynamics at Griffith University in Brisbane, Australia, and his colleagues imagine a futuristic “friend” that’s an artificial intelligence capable of humanlike thoughts. The AI would be built inside a quantum computer. Because the computations that give rise to such an AI’s thoughts would be quantum-mechanical, the AI would be in a superposition of having different thoughts at once (say, “I saw a flash” and “I did not see a flash”). Such an AI doesn’t exist yet, but scientists think it’s plausible. Even if they can’t carry out the experiment until the distant future, just thinking about this type of observer clarifies which elements of objective reality are at stake, and may have to be abandoned, in resolving Wigner’s paradox.

Matthew Twombly

Renato Renner, head of the research group for quantum information theory at ETH Zurich and someone who has also worked on the Wigner’s-friend paradox, is enthusiastic about this use of a quantum-mechanical AI. “Obviously, we cannot do a Wigner’s-friend experiment with real humans,” he says. “Whereas if you go to the other extreme and just do experiments with single [photons], that’s just not so convincing. [Wiseman and his team] tried to find this middle ground. And I think they did a very good job.”

Of course, it’s possible that an AI’s thoughts could never stand in for observations made by a human, in which case Wigner’s paradox will continue to haunt us. But if we agree that such an AI could be built, then detailing how such an experiment could be run helps to reveal something fundamental about the universe. It clarifies how we can determine who or what really counts as an observer and whether an observation collapses a superposition. It might even suggest that outcomes of measurements are relative to individual observers—and that there’s no absolute fact of the matter about the world we live in.

The proposed Wigner’s AI-friend experiment is a way of testing a so-called no-go theorem about some fundamental tenets of reality that may or may not be true. The proposal was devised by Wiseman, his Griffith University colleague Eric Cavalcanti and Eleanor Rieffel, who heads the Quantum Artificial Intelligence Laboratory at the NASA Ames Research Center in California. The theorem starts with a set of assumptions about physical reality, all of which seem extremely plausible.

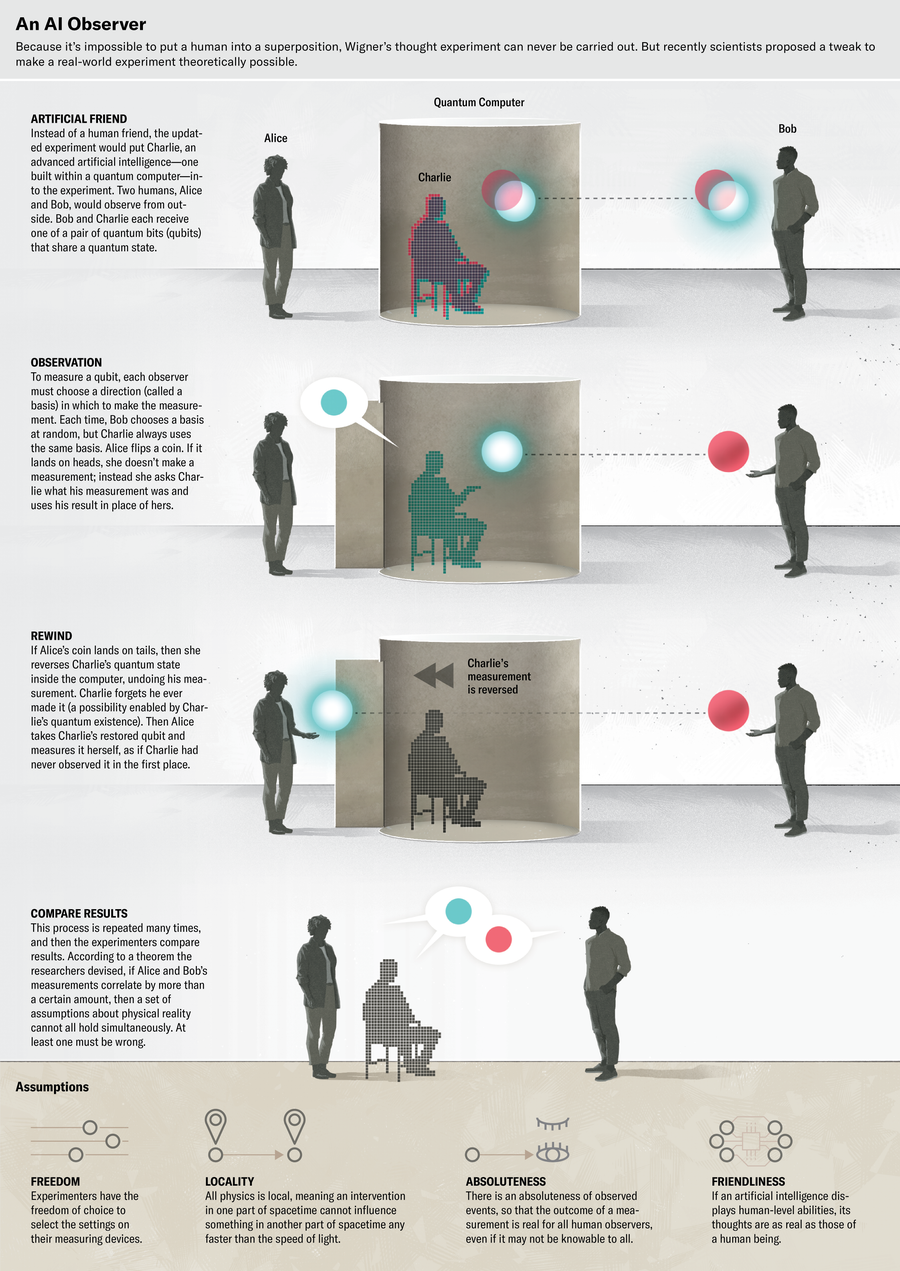

The first: we have the freedom to choose the settings on our measuring devices. The second: all physics is local, meaning an intervention in one part of spacetime cannot influence something in another part of spacetime any faster than the speed of light. The third: observed events are absolute such that the outcome of a measurement is real for all observers even if it may not be knowable to all. In other words, if you toss a quantum coin and get one of two possible outcomes, say heads, then that fact holds for all observers; the same coin can’t appear to land on tails for some other observer.

With these assumptions in place, the researchers took on Wigner’s view of human consciousness. Wigner, in his musings, argued that consciousness must be dealt with differently than all else. To drive home this point in his thought experiment, he asks us to consider an atom as the “friend” inside the lab. When the atom interacts with, or measures, the particle, the entire system ends up in a superposition of the particle flashing and the atom absorbing this light and entering a higher energy state, and the particle not flashing and the atom remaining in its ground state. Only when Wigner examines the lab does the atom enter one or the other state.

This isn’t at all hard to accept. An atom can indeed remain in a superposition of states as long as it’s isolated. But if the friend is a human being, then Wigner’s perspective from outside the lab and the friend’s perspective from within the lab are at odds. Surely, the friend knows whether a flash has occurred even if Wigner has not perceived it yet. “It follows that the being with a consciousness must have a different role in quantum mechanics than the inanimate measuring device,” Wigner wrote. But does his argument make sense? Is a human observer fundamentally different than, say, an atom acting as an observer?

Wiseman, Cavalcanti and Rieffel tackled this question head-on by adding a fourth assumption, which they call the friendliness assumption. It stipulates that if an artificial intelligence displays human-level abilities, its thoughts are as real as those of a human. The friendliness assumption is explicit about what constitutes an observer: “it’s a system that would be as intelligent as we are,” Cavalcanti says.

The team settled on the notion of “intelligence” rather than “consciousness” after much debate. During their discussions Cavalcanti argued that intelligence is something that can be quantified. “There’s no possible test to determine whether or not anyone else is conscious, even a human being, let alone a computer,” he says. Therefore, if a human-level AI were built, it would be unclear whether it was conscious and it would also be possible to deny that it was. “But [it would be] much harder to deny that it’s intelligent,” Cavalcanti says.

After describing all these assumptions in detail, the researchers proved that their version of Wigner’s-friend experiment, were it to be done to a high accuracy using an AI based inside a quantum computer, would result in a contradiction. Their no-go theorem would then imply that at least one of the assumptions must be wrong. Physicists would have to give up one of their cherished notions of reality.

The no-go theorem can be tested only if scientists someday invent an AI that’s not just intelligent but capable of being put in a superposition—a requirement that necessitates a quantum computer. Unlike a classical computer, a quantum computer uses quantum bits, or qubits, which can exist in a superposition of two values. In classical computation, a circuit of logic gates turns some input bits into output bits, and the output is definitive. In quantum computers, the output can end up in a superposition of states, each state representing one possible result. It’s only when you query the quantum computer that the superposition of possible results is destroyed (according to traditional interpretations of quantum mechanics), producing a single output.
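A toy simulation can make the "query" step concrete. The sketch below is not how a real quantum computer is programmed; it simply assumes the textbook Born rule, in which each possible result appears with probability equal to the squared magnitude of its amplitude. The function name `query` and the four-result example state are illustrative choices, not something taken from the researchers' work.

```python
import random

def query(amplitudes, rng):
    """Return one outcome index, chosen with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in amplitudes]
    r = rng.random() * sum(probs)
    cumulative = 0.0
    for index, p in enumerate(probs):
        cumulative += p
        if r < cumulative:
            return index
    return len(probs) - 1  # guard against floating-point rounding

# An equal superposition of the four two-bit results 00, 01, 10 and 11.
state = [0.5, 0.5, 0.5, 0.5]

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = [0, 0, 0, 0]
for _ in range(10_000):
    counts[query(state, rng)] += 1  # each query yields exactly one result

print(counts)  # roughly 2,500 of each result
```

Every individual query returns a single definite answer; the superposition shows up only in the statistics across many repeated runs.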

For their theorem, Wiseman, Cavalcanti and Rieffel assume that a powerful AI capable of human-level intelligence (a descendant of today’s ChatGPT, for example) can be implemented inside a quantum computer. They call this machine QUALL-E, after OpenAI’s image-generating AI DALL-E and Pixar’s robot WALL-E. The name also nods to the word “quale,” which refers to the quality of, say, the color red as perceived by a person. The team tried to figure out how feasible it is to develop an actual QUALL-E. This was Rieffel’s area of expertise.

Turning a futuristic classical AI algorithm into one that can work inside a quantum computer involves multiple steps. The first, Rieffel says, is to use well-established techniques to make the classical computation reversible. A reversible computation is one where the input bits in a logic circuit produce some output bits, and those output bits, when fed to the reversed logic circuit, reproduce the initial input bits. “Once you have a reversible classical algorithm, you can just immediately translate it to a quantum algorithm,” she says. If the classical algorithm is complex to start with, making it reversible adds considerable computing overhead. Still, you get an estimate of the required overall computing power. This stage reveals the approximate number of logical qubits necessary for the computation.
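The idea of reversibility can be seen in a single logic gate. An ordinary AND gate squeezes two input bits into one output bit, so the inputs cannot be recovered from the output. A standard reversible substitute is the Toffoli gate, which keeps both inputs and writes the AND result into an extra bit. The article does not name the gate; it is used here only as a familiar illustration, and the extra bit hints at where the overhead comes from.

```python
def toffoli(a, b, target):
    """Reversible AND: flip the target bit only when both controls are 1."""
    return a, b, target ^ (a & b)

for a in (0, 1):
    for b in (0, 1):
        forward = toffoli(a, b, 0)       # target starts at 0
        backward = toffoli(*forward)     # the gate is its own inverse
        print((a, b, 0), "->", forward, "->", backward)
        assert forward[2] == (a & b)     # third bit now holds a AND b
        assert backward == (a, b, 0)     # running it again restores the inputs
```

Because no information is thrown away, feeding the outputs back through the same gate reproduces the inputs, which is the property Rieffel describes.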

Another source of computing overhead is quantum-error correction. Qubits are fragile, and their superpositions can be destroyed by myriad elements in the environment, leading to errors in computation. Hence, quantum computers need additional qubits to keep track of accumulating errors and provide the necessary redundancy to get the computation back on track. In general, you need roughly 1,000 physical qubits to do the work of one logical qubit. “That’s a big overhead,” Rieffel says.

Her initial estimates of the computation power needed for a quantum-mechanical, human-level AI, using the current capabilities of fault-tolerant quantum gates, were staggering: for thoughts that would take a human one second to think, QUALL-E would require more than 500 years. It’s clear that QUALL-E isn’t going to be built anytime soon. “It’s going to be decades and require a lot of innovations before something like this proposed experiment could be run,” Rieffel says.
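A little arithmetic puts those figures in perspective. The sketch below uses only the two numbers quoted in the article (about 1,000 physical qubits per logical qubit, and more than 500 years per second of human thought). The logical-qubit count is a made-up placeholder, since the article does not give one.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year, in seconds

def slowdown_factor(machine_years, human_seconds):
    """How many times longer the machine takes than the human."""
    return machine_years * SECONDS_PER_YEAR / human_seconds

def physical_qubits(logical_qubits, overhead=1_000):
    """Physical qubits needed at the quoted 1,000-to-1 overhead."""
    return logical_qubits * overhead

factor = slowdown_factor(machine_years=500, human_seconds=1)
print(f"{factor:.2e}")  # 1.58e+10

# Placeholder only: the article does not say how many logical qubits are needed.
HYPOTHETICAL_LOGICAL_QUBITS = 1_000_000
print(physical_qubits(HYPOTHETICAL_LOGICAL_QUBITS))  # 1000000000
```

Since the estimate is "more than 500 years," the factor of about 16 billion is a lower bound on the slowdown under these assumptions.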

But Rieffel and her collaborators are optimistic. Wiseman takes inspiration from how far classical gates have come in the nearly two centuries since Charles Babbage designed his analytical engine, considered by many to be the first computer. “If quantum computers had the same type of trajectory for that length of time, [then] at some point in the future, I think, this is plausible,” Wiseman says. “In principle, I can’t see any reason that it can’t be done, but it is significantly harder than I had initially thought.”

Renner is also optimistic about the proposal. “It’s at least something we can technologically, in principle, achieve, in contrast to having real humans in superposition,” he says. Rieffel thinks smaller but still complex versions of QUALL-E that don’t necessarily display human-level intelligence could be built first. “It may be enough to do a nematode or something like that,” she says. “There are a lot of exciting possibilities between a single photon and the experiment we laid out.”

Let’s assume for now that one day QUALL-E will be built. When that happens, QUALL-E will play the role of Charlie, who sits between two human observers called Alice and Bob, in a Wigner’s-friend-type experiment. Charlie and his lab are quantum-mechanically isolated. All three entities must be far enough away from one another that no one’s choice of measurement can influence the outcomes of measurements made by the other two.

The experiment begins with a source of qubits. In this scenario, a qubit can be in some superposition of the values +1 and –1. Measuring a qubit involves specifying something called a basis—think of it as a direction. Using different measurement bases can yield different results. For example, measuring thousands of similarly prepared qubits in the “vertical” direction might produce equal numbers of +1 and –1 results. But with measurements in a basis that is at some angle to the vertical, you might observe +1 more often than –1, for example.
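The dependence on basis can be sketched with a standard textbook formula. If a qubit is prepared along one direction and measured along another, the chance of getting +1 is (1 + cos δ)/2, where δ is the angle between the two directions. The setup below, with every qubit prepared at 90 degrees to the vertical, is an assumption chosen to reproduce the example in the text; the function names are illustrative.

```python
import math
import random

def prob_plus(basis_deg, state_deg):
    """Probability of +1 when measuring along basis_deg a qubit prepared along state_deg."""
    delta = math.radians(basis_deg - state_deg)
    return (1 + math.cos(delta)) / 2

def measure_many(basis_deg, state_deg, trials, rng):
    """Simulate repeated measurements; return (count of +1, count of -1)."""
    p = prob_plus(basis_deg, state_deg)
    plus = sum(1 for _ in range(trials) if rng.random() < p)
    return plus, trials - plus

STATE_DEG = 90  # assumed preparation direction, measured from the vertical

print(round(prob_plus(0, STATE_DEG), 3))   # 0.5   (vertical basis: even split)
print(round(prob_plus(60, STATE_DEG), 3))  # 0.933 (tilted basis: mostly +1)

rng = random.Random(1)
print(measure_many(0, STATE_DEG, 10_000, rng))
print(measure_many(60, STATE_DEG, 10_000, rng))
```

The same batch of qubits therefore gives a balanced mix of results in one basis and a lopsided mix in another.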

The experimenter begins by taking two qubits that are described by a single quantum state—such that measurements of the quantum state of each qubit in the same basis are always perfectly correlated—and sends one each to Bob and Charlie. Bob measures his qubit by choosing one of two bases at random. Charlie, however, always measures the qubit with the same basis. Meanwhile Alice flips a coin. If it comes up heads, she doesn’t make a measurement; instead she asks Charlie for the outcome of his measurement and uses that as if it were the result of her own measurement.

But if the coin comes up tails, Alice reverses everything that Charlie did in his lab. She can do this because Charlie is a fully isolated quantum system whose computation is reversible. This rewind includes wiping Charlie’s memory of having ever taken a measurement—an action that would be impossible with a human observer. But undoing the observation and the observer’s recollection of it is the only way to take the system back to its initial conditions and thereby retrieve Charlie’s qubit in its original, unmeasured state.
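The rewind can be illustrated with a drastically simplified model in which Charlie's entire memory is a single qubit. In this toy picture, his "observation" is a controlled-NOT operation that copies the measured qubit's value into the memory, and Alice's reversal is the inverse operation (which, for this gate, is the same operation applied again). Both the one-qubit memory and the choice of gate are assumptions made for illustration.

```python
import math

# Amplitudes are ordered by |qubit, memory>: 00, 01, 10, 11.

def observe(state):
    """Controlled-NOT: flip the memory bit when the qubit is 1.
    This swaps the amplitudes of |10> and |11> and is its own inverse."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

s = 1 / math.sqrt(2)
before = [s, 0.0, s, 0.0]  # qubit in superposition, memory blank (0)

after = observe(before)    # memory now matches the qubit in each branch
rewound = observe(after)   # Alice undoes the observation

print(after)               # amplitudes sit on |00> and |11>
print(rewound == before)   # True: the memory is blank again
```

After the rewind, no trace of the observation remains in the memory, and the qubit is back in its original superposition, ready to be measured in a different basis.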

An important condition is that there is no communication between Charlie and Alice if she flips tails. The researchers emphasize that because Charlie is an AI agent capable of human-level thoughts, the experiment can be done only if “Charlie agreed to be in it,” Wiseman says. “He knows that he’s going to do something and then potentially that’s going to be undone.” Reversing the measurement and recovering the original qubit are crucial for Alice’s next step: she then observes Charlie’s qubit in a different basis than the one Charlie used.

Alice, Charlie and Bob carry out this entire process many times. The end result is that Bob’s measurements always represent his own observations, but Alice’s results are sometimes her own measurements (if she flips tails) and sometimes Charlie’s (if she flips heads). Therefore, her accumulated results represent a random mix of measurements taken by an outside observer and measurements made by an observer within the quantum superposition. This mix allows scientists to test whether the two kinds of observers see different things. At the end of the trials, Alice and Bob compare their results. Wiseman, Cavalcanti and Rieffel derived an equation to calculate the amount of correlation between Alice and Bob’s outcomes—essentially, a measurement of how often they agree.

Calculating the amount of correlation is difficult; it involves determining what value of the outcome you might expect for each measurement basis, based on the entire set of measurements, and then plugging those values into an equation. At the end of the process, the equation will spit out a number. If the number exceeds a certain threshold, the experiment will violate an inequality, suggesting a problem. Specifically, a violation of the inequality means the set of assumptions about physical reality the researchers built into their theorem cannot all hold simultaneously. At least one of them must be wrong.
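The article does not spell out the researchers' equation, so the sketch below substitutes a well-known relative: the CHSH expression used in Bell tests, which combines four correlation values and cannot exceed 2 under Bell's assumptions. The angles, the function names and the ideal quantum prediction (correlation equal to the cosine of the angle between the two bases, for a perfectly correlated pair with no noise) are all assumptions made for illustration.

```python
import math

def correlation(pairs):
    """Average product of paired +1/-1 outcomes: +1 means they always agree."""
    return sum(a * b for a, b in pairs) / len(pairs)

def chsh(e11, e12, e21, e22):
    """Combine the four basis-pair correlations into a single number."""
    return e11 + e12 + e21 - e22

def ideal_correlation(alice_deg, bob_deg):
    """Noise-free quantum prediction for a perfectly correlated pair."""
    return math.cos(math.radians(alice_deg - bob_deg))

# How a correlation would be estimated from recorded outcomes.
print(correlation([(1, 1), (1, -1), (-1, -1), (1, 1)]))  # 0.5

alice_bases = (0, 90)   # Charlie's fixed basis, then Alice's own basis
bob_bases = (45, -45)   # Bob's two randomly chosen bases
s_value = chsh(*(ideal_correlation(a, b)
                 for a in alice_bases for b in bob_bases))

THRESHOLD = 2
print(round(s_value, 3))    # 2.828
print(s_value > THRESHOLD)  # True: the inequality is violated
```

In a real run, each of the four correlations would come from `correlation` applied to the recorded outcomes rather than from the ideal formula, and noise would pull the final number down toward the threshold.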

Because of the way the scientists set up their no-go theorem, this outcome is what most physicists expect. “I’m confident that such an experiment, if performed, would violate the inequalities,” says Jeffrey Bub, a philosopher of physics and a professor emeritus at the University of Maryland, College Park.

If so, physicists would have to dump their least favorite assumption about physical reality. It wouldn’t be easy—all the assumptions are popular. The idea that physicists are free to choose their measurement settings, the notion of a universe that is local and obeys Albert Einstein’s laws, and the expectation that the outcome of a measurement one makes is true for all observers all seem sacrosanct on their face. “I think most physicists, if they were made to think about it, would want to hold on to all these assumptions,” Wiseman says. More physicists might be ready to doubt the friendliness assumption, though—the idea that “machine intelligence can entail genuine thoughts,” Wiseman says.

But if machines can have thoughts and the inequality is violated, then something must give. Adherents of different quantum theories or interpretations will point fingers at different assumptions as the source of the violation. Take Bohmian mechanics, developed by physicist David Bohm. This theory argues that there is a hidden, nonlocal reality behind our everyday experience of the world, allowing events here to instantaneously influence events elsewhere, regardless of the distance between them. Proponents of this idea would ditch the assumption of a local reality that obeys Einstein’s laws. In this new scenario, everything in the universe influences everything else, simultaneously, however weak the effect. The universe already has a small amount of nonlocality baked into it from quantum mechanics, although this quality still hews to Einstein’s view of a local universe. Given that this tiny amount of nonlocality is what allows for quantum communications and quantum cryptography, it’s nearly impossible to imagine the fallout of a profoundly nonlocal universe.

Then there are so-called objective collapse models of quantum physics, which argue that superpositions of states collapse randomly on their own, and measurement devices simply discover the outcome. Collapse theorists “would give up the idea that the quantum computer could simulate a human, or the friendliness assumption,” Renner says. They would believe a quantum computer, given enough qubits to completely compensate for any errors that arise in the computation, should protect the superposition indefinitely—it should never collapse. And if there’s no collapse, there’s nothing to observe.

Other physicists likely to give up on the friendliness assumption are those who adhere to the standard Copenhagen interpretation of quantum mechanics. This view argues that any measurement requires a hypothetical “Heisenberg cut”—a notional separator that divides the quantum system from the classical apparatus making measurements of it. Adherents of this interpretation “would deny the assumption that a universal quantum computer would ever be a valid agent because that computer remains in a superposition and therefore is on the quantum side of the Heisenberg cut,” Renner says. “It’s just on the wrong side to be an observer.” Such physicists would argue that the thoughts of an AI built inside a quantum computer are not a proxy for human thoughts. Bub is partial to this view. “I would reject the friendliness assumption in the context of such an experiment,” he says.

There’s a more striking alternative: give up the assumption about the absoluteness of observed events. Letting go of this one implies that observations of the same event lead to different outcomes depending on who’s doing the measuring (whether that observer is a conscious human, an AI or a photon). It’s a position that sits well with many-worlds theorists (those who follow a theory formulated by physicist Hugh Everett), who think superpositions never get truly destroyed: when a measurement is made, each possible state branches off to manifest in a different world. They would question the absoluteness of observed events because in their theory, thoughts or observations are absolute in their respective worlds, not in all worlds.

Some interpretations of quantum physics argue that even if there’s only one world, the outcomes of measurements may still be relative to an observer rather than an objective fact for everyone. Renner, for one, is open to the idea that the result of a quantum coin toss might be simultaneously tails for one observer and heads for another. “We probably have to give up the absoluteness of observed events,” he says, “which I think really has very little justification in physics.” He points to Einstein’s theories of relativity as examples. When you measure the velocity of an object, it’s relative to your frame of reference. Someone with another frame of reference would have to perform a well-defined mathematical translation, given by the rules of relativity, to determine the same object’s velocity from their own point of view.

No such rules exist, however, to translate things in the quantum world from one observer’s point of view to another’s. “We have almost no clue what this rule should be at the moment,” Renner says. Scientists have largely avoided thinking about the observer until recently, he adds. “Only now are people starting to even ask that question, so it’s not surprising that it doesn’t yet have an answer.” As reasonable as this notion may sound to some physicists, it’s a pretty radical change from how most people see the world. If measurement outcomes are relative to observers, that calls into question the entire scientific enterprise, which depends on the objectivity of experimental findings. Physicists would have to figure out a way to translate between quantum reference frames, in addition to classical reference frames.

An even more striking result of the experiment would be if the inequality weren’t violated. Wiseman thinks that although there’s a very small chance of this happening, we can’t be sure it won’t. “By far, the most interesting thing would be if we tried to do this experiment and just can’t get a violation,” he says. “That’s huge.” It would mean the laws of physics are different from what physicists think they are—an outcome that’s an even bigger shake-up than giving up one of the assumptions in the theorem.

No matter what, if this Wigner’s-friend experiment ever comes to be, its implications will be a big deal. That’s why scientists are so interested in the idea, even though the AI and computing technology needed to carry it out are a ways off. “It hasn’t altered the fact that I still think of this as a serious proposal—not [just] pie in the sky,” Wiseman says. It’s “something that I really hope, even after I’m dead, experimenters are going to be motivated to try to achieve.”

If scientists generations hence pull off this feat, they will probably grasp something about the nature of quantum reality that has so far eluded the best of minds. It just might be that the status of experimental observations goes the way of much else in physics—from a vaunted position to nothing special. The Copernican revolution told us Earth is not the center of the solar system. Cosmologists now know that our galaxy is in no more distinctive a location than that of the 100 billion other galaxies out there. In much the same way, observed events could turn out to have no objective status. Everything could be relative down to the smallest scales.